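The Hugging Face Hub supports all file formats, but it has built-in features for GGUF, a binary format optimized for fast loading and saving of models, which makes it efficient for inference. GGUF is designed for use with GGML and other executors. It was developed by @ggerganov, who is also the developer of llama.cpp, a popular C/C++ LLM inference framework. Models originally developed in frameworks such as PyTorch can be converted to GGUF for use with those engines.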

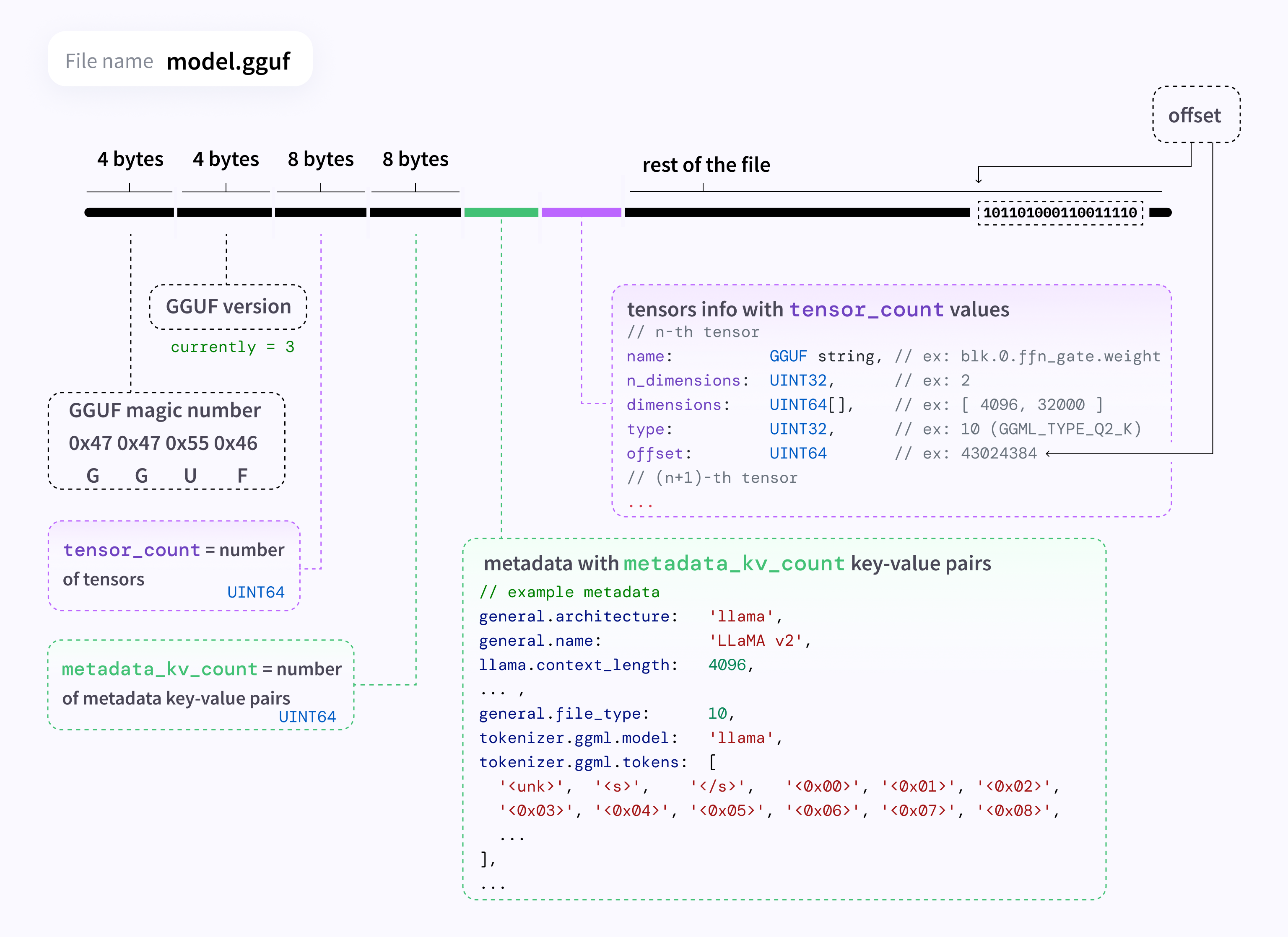

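As the diagram shows, unlike tensor-only file formats such as safetensors (which is also a recommended model format for the Hub), GGUF encodes both the tensors and a standardized set of metadata.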
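To make the "tensors plus metadata" layout concrete, here is a minimal sketch that reads only the fixed-size fields at the start of a GGUF file. It assumes the little-endian layout used by GGUF version 2 and later (a 4-byte `GGUF` magic, a 32-bit version, a 64-bit tensor count, and a 64-bit metadata key/value count). The function name `read_gguf_header` is hypothetical and is not part of any library.

```python
import struct

GGUF_MAGIC = b"GGUF"
HEADER_SIZE = 24  # 4 (magic) + 4 (version) + 8 (tensor count) + 8 (metadata KV count)


def read_gguf_header(data: bytes) -> dict:
    """Parse the fixed-size GGUF header fields from the first bytes of a file.

    Assumption: little-endian file, GGUF version >= 2 (64-bit counts).
    """
    if len(data) < HEADER_SIZE:
        raise ValueError("need at least 24 bytes to read a GGUF header")
    if data[:4] != GGUF_MAGIC:
        raise ValueError("not a GGUF file: bad magic")
    # "<" = little-endian, no padding; I = uint32, Q = uint64
    version, tensor_count, metadata_kv_count = struct.unpack_from("<IQQ", data, 4)
    return {
        "version": version,
        "tensor_count": tensor_count,
        "metadata_kv_count": metadata_kv_count,
    }
```

With a real file, one would pass the first 24 bytes, for example `read_gguf_header(open(path, "rb").read(24))`. The metadata key/value pairs and the tensor descriptions follow this header and are not parsed by this sketch.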

Quantization Types

| type | source | description |
|---|---|---|
| F64 | Wikipedia | 64-bit standard IEEE 754 double-precision floating-point number. |
| I64 | GH | 64-bit fixed-width integer number. |
| F32 | Wikipedia | 32-bit standard IEEE 754 single-precision floating-point number. |
| I32 | GH | 32-bit fixed-width integer number. |
| F16 | Wikipedia | 16-bit standard IEEE 754 half-precision floating-point number. |
| BF16 | Wikipedia | 16-bit shortened version of the 32-bit IEEE 754 single-precision floating-point number. |
| I16 | GH | 16-bit fixed-width integer number. |
| Q8_0 | GH | 8-bit round-to-nearest quantization (q). Each block has 32 weights. Weight formula: w = q * block_scale. Legacy quantization method (not used widely as of today). |
| Q8_1 | GH | 8-bit round-to-nearest quantization (q). Each block has 32 weights. Weight formula: w = q * block_scale + block_minimum. Legacy quantization method (not used widely as of today). |
| Q8_K | GH | 8-bit quantization (q). Each block has 256 weights. Only used for quantizing intermediate results. All 2-6 bit dot products are implemented for this quantization type. Weight formula: w = q * block_scale. |
| I8 | GH | 8-bit fixed-width integer number. |
| Q6_K | GH | 6-bit quantization (q). Super-blocks with 16 blocks, each block has 16 weights. Weight formula: w = q * block_scale(8-bit), resulting in 6.5625 bits-per-weight. |
| Q5_0 | GH | 5-bit round-to-nearest quantization (q). Each block has 32 weights. Weight formula: w = q * block_scale. Legacy quantization method (not used widely as of today). |
| Q5_1 | GH | 5-bit round-to-nearest quantization (q). Each block has 32 weights. Weight formula: w = q * block_scale + block_minimum. Legacy quantization method (not used widely as of today). |
| Q5_K | GH | 5-bit quantization (q). Super-blocks with 8 blocks, each block has 32 weights. Weight formula: w = q * block_scale(6-bit) + block_min(6-bit), resulting in 5.5 bits-per-weight. |
| Q4_0 | GH | 4-bit round-to-nearest quantization (q). Each block has 32 weights. Weight formula: w = q * block_scale. Legacy quantization method (not used widely as of today). |
| Q4_1 | GH | 4-bit round-to-nearest quantization (q). Each block has 32 weights. Weight formula: w = q * block_scale + block_minimum. Legacy quantization method (not used widely as of today). |
| Q4_K | GH | 4-bit quantization (q). Super-blocks with 8 blocks, each block has 32 weights. Weight formula: w = q * block_scale(6-bit) + block_min(6-bit), resulting in 4.5 bits-per-weight. |
| Q3_K | GH | 3-bit quantization (q). Super-blocks with 16 blocks, each block has 16 weights. Weight formula: w = q * block_scale(6-bit), resulting in 3.4375 bits-per-weight. |
| Q2_K | GH | 2-bit quantization (q). Super-blocks with 16 blocks, each block has 16 weights. Weight formula: w = q * block_scale(4-bit) + block_min(4-bit), resulting in 2.5625 bits-per-weight. |
| IQ4_NL | GH | 4-bit quantization (q). Super-blocks with 256 weights. Weight w is obtained using super_block_scale & importance matrix. |
| IQ4_XS | HF | 4-bit quantization (q). Super-blocks with 256 weights. Weight w is obtained using super_block_scale & importance matrix, resulting in 4.25 bits-per-weight. |
| IQ3_S | HF | 3-bit quantization (q). Super-blocks with 256 weights. Weight w is obtained using super_block_scale & importance matrix, resulting in 3.44 bits-per-weight. |
| IQ3_XXS | HF | 3-bit quantization (q). Super-blocks with 256 weights. Weight w is obtained using super_block_scale & importance matrix, resulting in 3.06 bits-per-weight. |
| IQ2_XXS | HF | 2-bit quantization (q). Super-blocks with 256 weights. Weight w is obtained using super_block_scale & importance matrix, resulting in 2.06 bits-per-weight. |
| IQ2_S | HF | 2-bit quantization (q). Super-blocks with 256 weights. Weight w is obtained using super_block_scale & importance matrix, resulting in 2.5 bits-per-weight. |
| IQ2_XS | HF | 2-bit quantization (q). Super-blocks with 256 weights. Weight w is obtained using super_block_scale & importance matrix, resulting in 2.31 bits-per-weight. |
| IQ1_S | HF | 1-bit quantization (q). Super-blocks with 256 weights. Weight w is obtained using super_block_scale & importance matrix, resulting in 1.56 bits-per-weight. |
| IQ1_M | GH | 1-bit quantization (q). Super-blocks with 256 weights. Weight w is obtained using super_block_scale & importance matrix, resulting in 1.75 bits-per-weight. |
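The two weight formulas in the table, `w = q * block_scale` and `w = q * block_scale + block_minimum`, can be illustrated with a simplified round trip over one block of 32 weights. This is a sketch of the idea only, not ggml's implementation: the function names are hypothetical, scales are kept as Python floats rather than packed 16-bit values, and no bit packing is done. The `bits_per_weight` helper reproduces some of the bits-per-weight figures under one additional assumption that the table does not state: each super-block also stores one 16-bit scale (Q6_K, Q3_K) or a 16-bit scale plus a 16-bit minimum (Q4_K, Q5_K).

```python
import math

BLOCK_SIZE = 32  # weights per block for the Q*_0 / Q*_1 types in the table


def quantize_scale_only(weights, bits=8):
    """Q*_0-style scheme: signed integers q with w ~= q * block_scale."""
    qmax = 2 ** (bits - 1) - 1                      # 127 for 8 bits
    amax = max(abs(w) for w in weights)
    scale = amax / qmax if amax > 0 else 1.0
    q = [max(-qmax, min(qmax, round(w / scale))) for w in weights]
    return q, scale


def dequantize_scale_only(q, scale):
    return [qi * scale for qi in q]


def quantize_scale_min(weights, bits=4):
    """Q*_1-style scheme: unsigned q with w ~= q * block_scale + block_minimum."""
    qmax = 2 ** bits - 1                            # 15 for 4 bits
    lo, hi = min(weights), max(weights)
    scale = (hi - lo) / qmax if hi > lo else 1.0
    q = [max(0, min(qmax, round((w - lo) / scale))) for w in weights]
    return q, scale, lo


def dequantize_scale_min(q, scale, minimum):
    return [qi * scale + minimum for qi in q]


def bits_per_weight(n_blocks, block_size, q_bits, per_block_bits, n_fp16_fields):
    """Average storage per weight in a super-block.

    n_fp16_fields is an assumption (16-bit super-block scale and/or minimum);
    it is not listed in the table.
    """
    n_weights = n_blocks * block_size
    total_bits = n_weights * q_bits + n_blocks * per_block_bits + 16 * n_fp16_fields
    return total_bits / n_weights


if __name__ == "__main__":
    block = [math.sin(i) for i in range(BLOCK_SIZE)]
    q, scale = quantize_scale_only(block)
    restored = dequantize_scale_only(q, scale)
    print(max(abs(a - b) for a, b in zip(block, restored)) <= scale / 2 + 1e-12)
    print(bits_per_weight(8, 32, 4, 6 + 6, 2))   # Q4_K: 4.5
    print(bits_per_weight(16, 16, 6, 8, 1))      # Q6_K: 6.5625
```

The rounding error of either scheme is bounded by half a quantization step, which is why the scale-plus-minimum variant can be more accurate for blocks whose values are not centered on zero: its step covers only the range between the block minimum and maximum.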

Provided files

| Name | Quant method | Bits | Size | Max RAM required | Use case |
|---|---|---|---|---|---|
| llama-2-13b-chat.ggmlv3.q2_K.bin | q2_K | 2 | 5.51 GB | 8.01 GB | New k-quant method. Uses GGML_TYPE_Q4_K for the attention.wv and feed_forward.w2 tensors, GGML_TYPE_Q2_K for the other tensors. |
| llama-2-13b-chat.ggmlv3.q3_K_S.bin | q3_K_S | 3 | 5.66 GB | 8.16 GB | New k-quant method. Uses GGML_TYPE_Q3_K for all tensors. |
| llama-2-13b-chat.ggmlv3.q3_K_M.bin | q3_K_M | 3 | 6.31 GB | 8.81 GB | New k-quant method. Uses GGML_TYPE_Q4_K for the attention.wv, attention.wo, and feed_forward.w2 tensors, else GGML_TYPE_Q3_K. |
| llama-2-13b-chat.ggmlv3.q3_K_L.bin | q3_K_L | 3 | 6.93 GB | 9.43 GB | New k-quant method. Uses GGML_TYPE_Q5_K for the attention.wv, attention.wo, and feed_forward.w2 tensors, else GGML_TYPE_Q3_K. |
| llama-2-13b-chat.ggmlv3.q4_0.bin | q4_0 | 4 | 7.32 GB | 9.82 GB | Original quant method, 4-bit. |
| llama-2-13b-chat.ggmlv3.q4_K_S.bin | q4_K_S | 4 | 7.37 GB | 9.87 GB | New k-quant method. Uses GGML_TYPE_Q4_K for all tensors. |
| llama-2-13b-chat.ggmlv3.q4_K_M.bin | q4_K_M | 4 | 7.87 GB | 10.37 GB | New k-quant method. Uses GGML_TYPE_Q6_K for half of the attention.wv and feed_forward.w2 tensors, else GGML_TYPE_Q4_K. |
| llama-2-13b-chat.ggmlv3.q4_1.bin | q4_1 | 4 | 8.14 GB | 10.64 GB | Original quant method, 4-bit. Higher accuracy than q4_0 but not as high as q5_0. However, it has quicker inference than q5 models. |
| llama-2-13b-chat.ggmlv3.q5_0.bin | q5_0 | 5 | 8.95 GB | 11.45 GB | Original quant method, 5-bit. Higher accuracy, higher resource usage and slower inference. |
| llama-2-13b-chat.ggmlv3.q5_K_S.bin | q5_K_S | 5 | 8.97 GB | 11.47 GB | New k-quant method. Uses GGML_TYPE_Q5_K for all tensors. |
| llama-2-13b-chat.ggmlv3.q5_K_M.bin | q5_K_M | 5 | 9.23 GB | 11.73 GB | New k-quant method. Uses GGML_TYPE_Q6_K for half of the attention.wv and feed_forward.w2 tensors, else GGML_TYPE_Q5_K. |
| llama-2-13b-chat.ggmlv3.q5_1.bin | q5_1 | 5 | 9.76 GB | 12.26 GB | Original quant method, 5-bit. Even higher accuracy, resource usage and slower inference. |
| llama-2-13b-chat.ggmlv3.q6_K.bin | q6_K | 6 | 10.68 GB | 13.18 GB | New k-quant method. Uses GGML_TYPE_Q6_K (6-bit quantization) for all tensors. |
| llama-2-13b-chat.ggmlv3.q8_0.bin | q8_0 | 8 | 13.83 GB | 16.33 GB | Original quant method, 8-bit. Almost indistinguishable from float16. High resource use and slow. Not recommended for most users. |

Note: the above RAM figures assume no GPU offloading. If layers are offloaded to the GPU, this will reduce RAM usage and use VRAM instead.
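In the table above, every "Max RAM required" value equals the file size plus 2.50 GB. The following sketch uses that observation to pick a file for a given RAM budget when nothing is offloaded to the GPU. The helper names are hypothetical, and treating "largest file that fits" as the preferred choice is a simplifying assumption, not a recommendation from the table.

```python
# File sizes in GB, copied from the "Provided files" table.
FILE_SIZES_GB = {
    "q2_K": 5.51, "q3_K_S": 5.66, "q3_K_M": 6.31, "q3_K_L": 6.93,
    "q4_0": 7.32, "q4_K_S": 7.37, "q4_K_M": 7.87, "q4_1": 8.14,
    "q5_0": 8.95, "q5_K_S": 8.97, "q5_K_M": 9.23, "q5_1": 9.76,
    "q6_K": 10.68, "q8_0": 13.83,
}

# Observed in the table: Max RAM required = Size + 2.50 GB for every row.
RAM_OVERHEAD_GB = 2.50


def max_ram_gb(size_gb: float) -> float:
    """Estimated RAM needed with no GPU offloading, per the table's pattern."""
    return round(size_gb + RAM_OVERHEAD_GB, 2)


def largest_fitting_quant(available_ram_gb: float):
    """Return the quant method of the largest file that fits, or None.

    Assumption: a larger file is preferred whenever it fits in RAM.
    """
    fitting = [
        (size, name)
        for name, size in FILE_SIZES_GB.items()
        if max_ram_gb(size) <= available_ram_gb
    ]
    return max(fitting)[1] if fitting else None


if __name__ == "__main__":
    print(max_ram_gb(FILE_SIZES_GB["q4_K_M"]))   # 10.37
    print(largest_fitting_quant(12.0))           # q5_K_M
    print(largest_fitting_quant(8.0))            # None
```

If some layers are offloaded to the GPU, the RAM requirement drops as described in the note, so this estimate is an upper bound for that case.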